The infrastructure of NHR4CES

A cooperation of TU Darmstadt and RWTH Aachen University

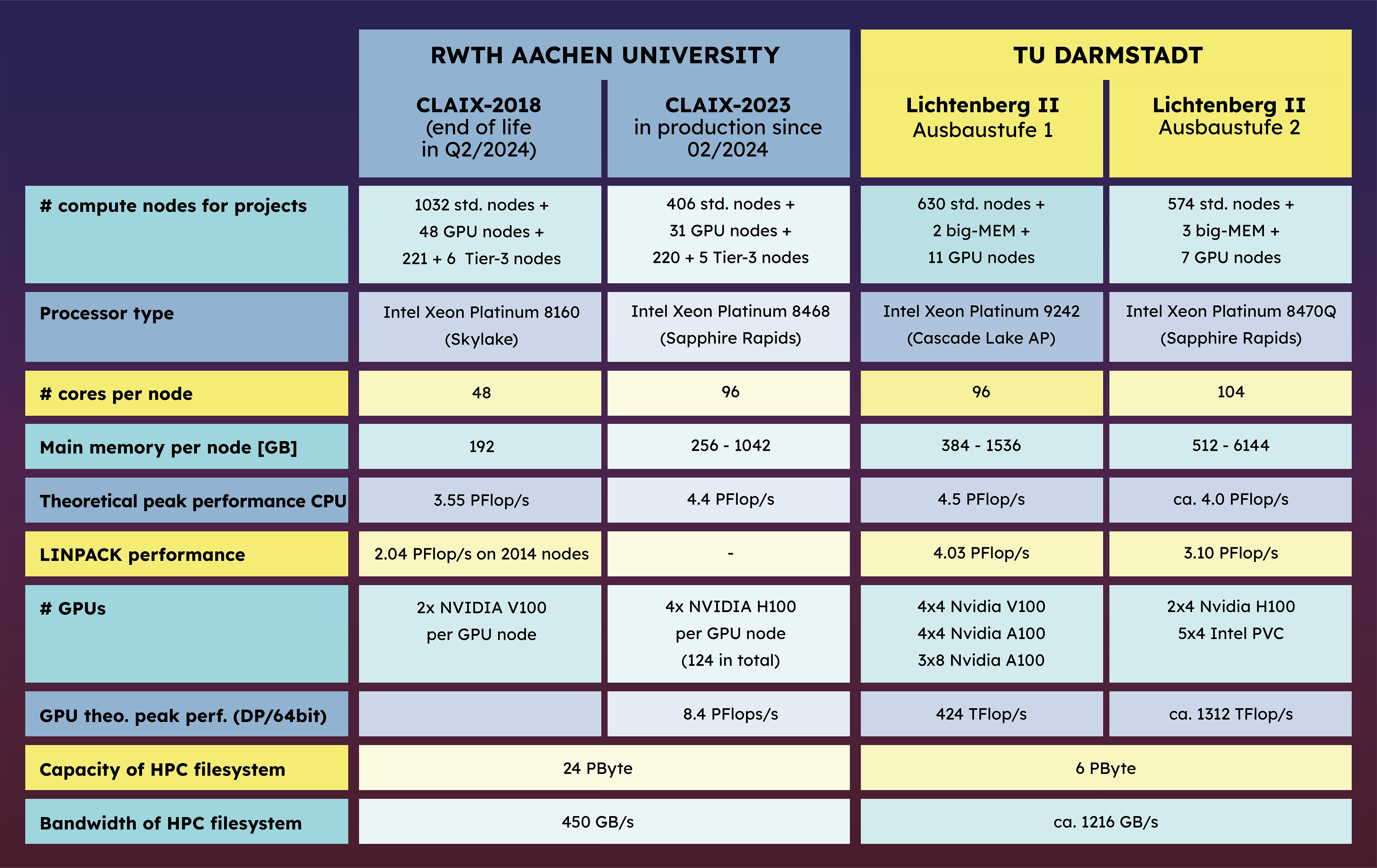

Lichtenberg II and CLAIX-2023

TU Darmstadt and RWTH Aachen University have successfully operated regional Tier-2 HPC systems for years.

Parts of both systems have long been open to academic researchers from across Germany. The goal is to bring together HPC applications, algorithms and methods with the efficient use of HPC hardware.

This creates an infrastructure that enables scientists to answer questions of central importance to the economy and society, whether in engineering and materials science or in engineering-oriented physics, chemistry or medicine.