1 July 2024

CSG Parallelism and Performance

TU Darmstadt and RWTH Aachen University

Cross-Sectional Group

The CSG Parallelism and Performance supports performance engineering, in particular performance management, modeling, optimization, and parallel programming.

The HPC community is approaching a new era of supercomputing known as exascale, which denotes the ability to execute 10¹⁸ floating-point operations per second. To reach exascale, supercomputer designs are becoming increasingly complex, and so is programming them. For instance, hardware architectures are increasingly heterogeneous, forcing HPC applications to exploit multiple levels of parallelism. Ensuring both the correctness and the performance of applications running on such systems is a non-trivial task that requires substantial HPC expertise.

In the CSG Parallelism and Performance, HPC experts from RWTH Aachen University and TU Darmstadt, with Jülich Supercomputing Centre as an associated partner, bundle their expertise to foster the efficient and productive use of current and emerging HPC infrastructures by users from a broad spectrum of scientific disciplines. To achieve this goal, we provide user support, offer training, and organize workshops for novice and advanced HPC users alike. In addition, we conduct research related to our service portfolio and, in particular, develop suitable tools.

Competencies

- Performance analysis, modeling, and optimization

- Supporting parallelization and architecture porting

- Correctness analysis

- Enabling new HPC use cases

Service:

- Parallelization support

- Correctness analysis and debugging of concurrency errors

- Performance analysis and optimization

- Porting to new architectures / improving (performance) portability

- Enabling new HPC use cases

Research:

- Parallelism discovery

- Data race and deadlock detection

- Performance modeling

- Efficient coupling of AI with classic numerical simulation

Tools:

- Archer (correctness checking for multithreaded programs)

- DiscoPoP (parallelization assistance)

- Extra-P (performance modeling)

- MUST (correctness checking for MPI programs)

Recent training activities:

- PPCES / aiXcelerate (RWTH Aachen)

- ISC/SC Tutorials (PP101, OpenMP, Correctness, Performance)

- HiPerCH (HKHLR)

- RACE Tutorials (OpenMP / MPI)

Publications

2023

- Satellite Collision Detection using Spatial Data Structures (Christian Hellwig, Fabian Czappa, Martin Michel, Reinhold Bertrand, Felix Wolf), Proc. of the 37th IEEE International Parallel and Distributed Processing Symposium (IPDPS), St. Petersburg, Florida, USA, pages 724–735, IEEE, May 2023.

- Simulating Structural Plasticity of the Brain More Scalable than Expected (Fabian Czappa, Alexander Geiß, Felix Wolf), Journal of Parallel and Distributed Computing, 171:24–27, January 2023.

- Filtering and Ranking of Code Regions for Parallelization via Hotspot Detection and OpenMP Overhead Analysis (Seyed Ali Mohammadi, Lukas Rothenberger, Gustavo de Morais, Bertin Nico Görlich, Erik Lille, Hendrik Rüthers, Felix Wolf), Proc. of the Workshop on Programming and Performance Visualization Tools (ProTools), held in conjunction with the Supercomputing Conference (SC23), Denver, CO, USA, pages 1368–1379, November 2023.

2022

- A Case Study on Coupling OpenFOAM with Different Machine Learning Frameworks (Fabian Orland, Kim Sebastian Brose, Julian Bissantz, Federica Ferraro, Christian Terboven, Christian Hasse), AI4S Workshop at SC22 (accepted: 10 September 2022).

- Accelerating Brain Simulations with the Fast Multipole Method (Hannah Nöttgen, Fabian Czappa, Felix Wolf), Proc. of the 28th Euro-Par Conference 2022: Parallel Processing, Glasgow, UK, volume 13440 of Lecture Notes in Computer Science, pages 387–402, Springer, August 2022.

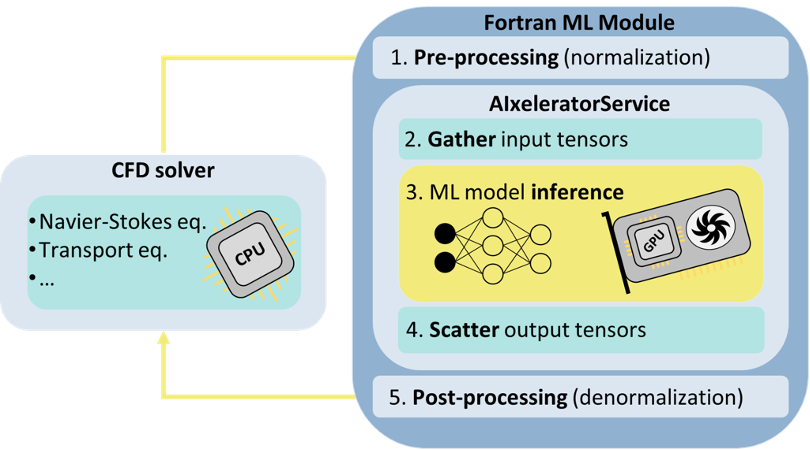

Development of a library to couple traditional numerical solvers (e.g., CFD) with deep learning models on heterogeneous architectures (CPU+GPU) supporting 6 use cases from SDL-EC & SDL-Fluids.

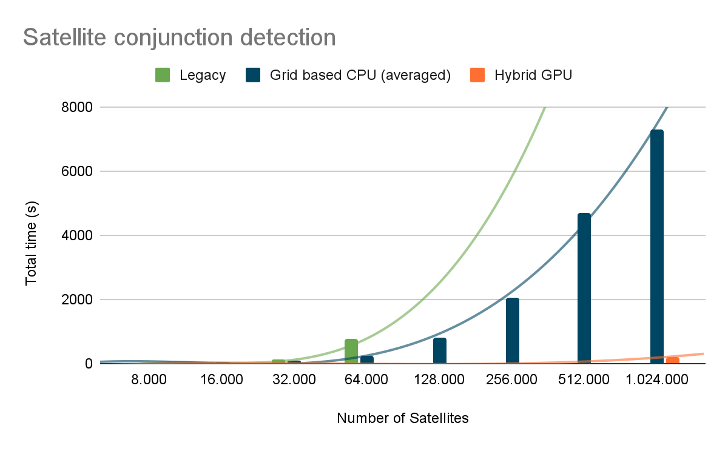

In collaboration with ESA, we developed a new GPU-based algorithm to detect satellite collisions, which can handle an order of magnitude more satellites than the legacy version.